Configure and update the yum repositories:

wget http://download.ceph.com/rpm-hammer/el6/noarch/ceph-release-1-0.el6.noarch.rpm
wget https://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
rpm -ivh ceph-release-1-0.el6.noarch.rpm
rpm -ivh epel-release-6-8.noarch.rpm
yum clean all
yum update

Install Ceph (the 0.73 packages are local RPM files; dependencies such as leveldb come from yum):

yum -y install leveldb
rpm -ivh librados2-0.73-0.el6.x86_64.rpm
rpm -ivh librbd1-0.73-0.el6.x86_64.rpm
rpm -ivh libcephfs1-0.73-0.el6.x86_64.rpm
rpm -ivh libcephfs_jni1-0.73-0.el6.x86_64.rpm
rpm -ivh rest-bench-0.73-0.el6.x86_64.rpm
yum -y install python-flask
rpm -ivh python-ceph-0.73-0.el6.x86_64.rpm
rpm -ivh cephfs-java-0.73-0.el6.x86_64.rpm
yum -y install gperftools-libs
rpm -ivh ceph-0.73-0.el6.x86_64.rpm
rpm -ivh ceph-devel-0.73-0.el6.x86_64.rpm
rpm -ivh ceph-fuse-0.73-0.el6.x86_64.rpm
rpm -ivh rbd-fuse-0.73-0.el6.x86_64.rpm
rpm -ivh ceph-test-0.73-0.el6.x86_64.rpm
yum -y install fcgi
rpm -ivh ceph-radosgw-0.73-0.el6.x86_64.rpm
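The install sequence above can be scripted as a single loop. This is a minimal sketch that assumes the 0.73 RPM files have already been downloaded into the current directory (the original does not say where they come from). The `run` helper, `install_all` and the `DRY_RUN` variable are illustrative names. With `DRY_RUN=1` the commands are only printed.

```shell
#!/bin/sh
# Sketch: install the locally downloaded 0.73 RPMs in the same order as above.
# ASSUMPTION: the .rpm files are in the current directory.
# DRY_RUN=1 prints the commands instead of running them (hypothetical switch).

CEPH_VER="0.73-0.el6.x86_64"

# Package names in install order; "yum:" entries are installed from the repos.
PKGS="yum:leveldb librados2 librbd1 libcephfs1 libcephfs_jni1 rest-bench
yum:python-flask python-ceph cephfs-java yum:gperftools-libs ceph ceph-devel
ceph-fuse rbd-fuse ceph-test yum:fcgi ceph-radosgw"

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

rpm_file() {
    # Local file name for a package, e.g. librados2-0.73-0.el6.x86_64.rpm
    echo "$1-$CEPH_VER.rpm"
}

install_all() {
    for p in $PKGS; do
        case "$p" in
            yum:*) run yum -y install "${p#yum:}" ;;
            *)     run rpm -ivh "$(rpm_file "$p")" ;;
        esac
    done
}
```

Running `DRY_RUN=1 install_all` first shows the exact command order before anything is installed.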

Cluster configuration file:

mkdir -p /etc/ceph
cd /etc/ceph/
vim ceph.conf

[global]
fsid = 56dd83c2-af48-4288-b839-89047a3fcaab
public network = 10.100.0.0/16
osd pool default min size = 1
osd pool default pg num = 128
osd pool default pgp num = 128
osd journal size = 1024

[mon]
mon initial members = ceph-node1
mon host = ceph-node1,ceph-node2,ceph-node3
mon addr = 10.100.20.41,10.100.20.68,10.100.20.61

[mon.ceph-node1]
host = ceph-node1
mon addr = 10.100.20.41
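Writing the file from a heredoc keeps the fsid in one variable instead of retyping it. This is a minimal sketch that assumes the fsid is produced with `uuidgen` (the original does not say how its fsid was generated). `write_ceph_conf` is a hypothetical helper, and the settings are copied from the config above.

```shell
#!/bin/sh
# Sketch: write the ceph.conf shown above; the fsid and output path are parameters.
# write_ceph_conf is a hypothetical helper, not part of Ceph.

write_ceph_conf() {
    fsid="$1"   # cluster fsid (a UUID)
    out="$2"    # target file, e.g. /etc/ceph/ceph.conf
    cat > "$out" <<EOF
[global]
fsid = $fsid
public network = 10.100.0.0/16
osd pool default min size = 1
osd pool default pg num = 128
osd pool default pgp num = 128
osd journal size = 1024

[mon]
mon initial members = ceph-node1
mon host = ceph-node1,ceph-node2,ceph-node3
mon addr = 10.100.20.41,10.100.20.68,10.100.20.61

[mon.ceph-node1]
host = ceph-node1
mon addr = 10.100.20.41
EOF
}
```

For example, `write_ceph_conf "$(uuidgen)" /etc/ceph/ceph.conf` creates a fresh cluster id. The same value must then be reused for monmaptool and ceph-disk.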

Create the cluster keyring and generate the monitor key:

ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

Create a client.admin user and write its key to a keyring:

ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow'

Import the client.admin key into ceph.mon.keyring:

ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring
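Before building the monmap, it is worth confirming that the import worked. Ceph keyrings are INI-style files with one `[entity]` header per key, so a sketch can simply look for the `[mon.]` and `[client.admin]` headers. `keyring_has_entity` and `check_mon_keyring` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: check that the monitor keyring holds both the mon. and client.admin keys.
# ASSUMPTION: each key starts with a "[entity]" header line, as ceph-authtool writes it.

keyring_has_entity() {
    # $1 = keyring file, $2 = entity name such as "mon." or "client.admin"
    grep -Fqx "[$2]" "$1"
}

check_mon_keyring() {
    kr="${1:-/tmp/ceph.mon.keyring}"
    for ent in mon. client.admin; do
        if ! keyring_has_entity "$kr" "$ent"; then
            echo "missing [$ent] in $kr" >&2
            return 1
        fi
    done
    echo "ok: $kr"
}
```

`ceph-authtool -l /tmp/ceph.mon.keyring` lists the same entries together with their keys and caps.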

Generate the monitor map:

monmaptool --create --add ceph-node1 10.100.20.41 --fsid 56dd83c2-af48-4288-b839-89047a3fcaab /tmp/monmap
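The same fsid has to appear in ceph.conf, in the monmap and in every `ceph-disk --cluster-uuid` argument, and a retyped UUID is easy to get wrong. This is a minimal sketch that reads the value back out of ceph.conf, assuming a `fsid = <uuid>` line as in the config above. `conf_fsid` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: print the fsid from ceph.conf so it can be passed to monmaptool / ceph-disk.
# ASSUMPTION: the config contains a single "fsid = <uuid>" line.

conf_fsid() {
    awk -F'=' '/^[ \t]*fsid[ \t]*=/ { v = $2; gsub(/[ \t]/, "", v); print v; exit }' \
        "${1:-/etc/ceph/ceph.conf}"
}

# Example (not run here):
# FSID=$(conf_fsid)
# monmaptool --create --add ceph-node1 10.100.20.41 --fsid "$FSID" /tmp/monmap
```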

Create the monitor data directory, named after the /path/cluster_name-monitor_node pattern:

mkdir /var/lib/ceph/mon/ceph-ceph-node1
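The directory name joins the cluster name and the monitor id. That is why it reads `ceph-ceph-node1`: the cluster is `ceph` and the monitor is `ceph-node1`. A tiny hypothetical helper makes the pattern explicit; the /var/lib/ceph/mon prefix is the path used in this guide.

```shell
#!/bin/sh
# Sketch: build a monitor data directory path as <prefix>/<cluster>-<monitor id>.
# mon_data_dir is a hypothetical helper; the prefix is the one used in this guide.

mon_data_dir() {
    # $1 = cluster name (defaults to "ceph"), $2 = monitor id (here the host name)
    echo "/var/lib/ceph/mon/${1:-ceph}-$2"
}

# Example: mkdir -p "$(mon_data_dir ceph ceph-node1)"
```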

Initialize the monitor's data store from the monmap and keyring:

ceph-mon --mkfs -i ceph-node1 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

Start the Ceph service:

service ceph start

Check the cluster status:

ceph status
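`ceph status` may not report HEALTH_OK right away, for example while no OSDs exist yet. Once the OSDs below are active, the check can be scripted as a poll loop. This is a minimal sketch. `HEALTH_CMD`, `MAX_TRIES` and `SLEEP_SECS` are hypothetical knobs: `HEALTH_CMD` defaults to `ceph health` and is a variable only so the loop can be exercised without a cluster.

```shell
#!/bin/sh
# Sketch: poll cluster health until it starts with HEALTH_OK, or give up.
# HEALTH_CMD / MAX_TRIES / SLEEP_SECS are illustrative settings, not Ceph options.

wait_for_health() {
    cmd="${HEALTH_CMD:-ceph health}"
    tries="${MAX_TRIES:-30}"
    i=0
    status=""
    while [ "$i" -lt "$tries" ]; do
        status=$($cmd 2>/dev/null)
        case "$status" in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        i=$((i + 1))
        sleep "${SLEEP_SECS:-10}"
    done
    echo "last status: $status" >&2
    return 1
}
```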

List the default pools:

ceph osd lspools

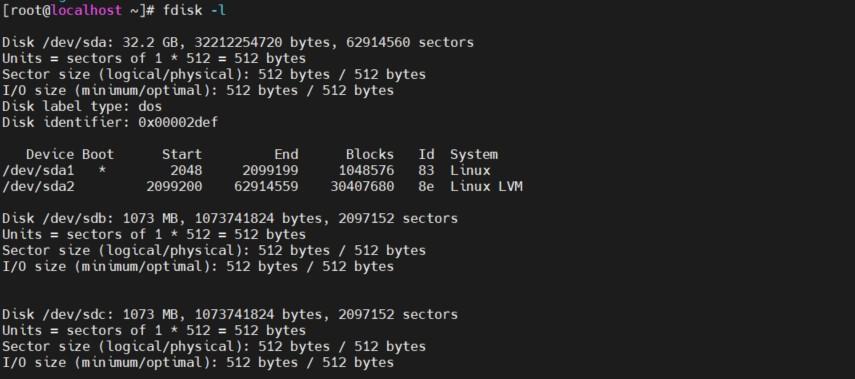

List the disks:

ceph-disk list

Create the OSDs

Create GPT partition labels:

parted /dev/sdb mklabel GPT
parted /dev/sdc mklabel GPT
parted /dev/sdd mklabel GPT

Prepare the OSD disks:

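ceph-disk prepare splits each disk into two partitions. Partition 1 holds the OSD data (formatted as xfs) and takes the rest of the disk. Partition 2 is the journal, sized by `osd journal size` in ceph.conf (1024 MB here).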

ceph-disk prepare --cluster ceph --cluster-uuid 56dd83c2-af48-4288-b839-89047a3fcaab --fs-type xfs /dev/sdb
ceph-disk prepare --cluster ceph --cluster-uuid 56dd83c2-af48-4288-b839-89047a3fcaab --fs-type xfs /dev/sdc
ceph-disk prepare --cluster ceph --cluster-uuid 56dd83c2-af48-4288-b839-89047a3fcaab --fs-type xfs /dev/sdd

Prepare the data partitions:

ceph-disk prepare /dev/sdb1
ceph-disk prepare /dev/sdc1
ceph-disk prepare /dev/sdd1

Activate the OSD partitions:

ceph-disk activate /dev/sdb1
ceph-disk activate /dev/sdc1
ceph-disk activate /dev/sdd1
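The per-disk steps (GPT label, whole-disk prepare, activation of partition 1) can be run as one loop. This is a minimal sketch. `DISKS` and `FSID` are assumptions to adjust per node, and `run`/`DRY_RUN` are hypothetical helpers. `parted -s` runs parted non-interactively. Note that `mklabel` wipes any existing partition table.

```shell
#!/bin/sh
# Sketch: label, prepare and activate each OSD disk in turn.
# ASSUMPTIONS: DISKS lists whole, empty disks; FSID matches ceph.conf.
# WARNING: mklabel destroys the existing partition table on each disk.

DISKS="${DISKS:-sdb sdc sdd}"
FSID="${FSID:-56dd83c2-af48-4288-b839-89047a3fcaab}"

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

setup_osds() {
    for d in $DISKS; do
        run parted -s "/dev/$d" mklabel gpt
        run ceph-disk prepare --cluster ceph --cluster-uuid "$FSID" --fs-type xfs "/dev/$d"
        run ceph-disk activate "/dev/${d}1"   # partition 1 is the data partition
    done
}
```

`DRY_RUN=1 setup_osds` prints the nine commands for review before touching any disk.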

Copy the admin keyring and ceph.conf to the other cluster nodes:

rsync -av --progress /etc/ceph/ceph.client.admin.keyring root@ceph-node2:/etc/ceph/
rsync -av --progress /etc/ceph/ceph.conf root@ceph-node2:/etc/ceph/
rsync -av --progress /etc/ceph/ceph.client.admin.keyring root@ceph-node3:/etc/ceph/
rsync -av --progress /etc/ceph/ceph.conf root@ceph-node3:/etc/ceph/
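Looping over nodes and files avoids retyping paths, which is where a typo such as `/et/ceph` slips in. This is a minimal sketch. `NODES` is an assumption built from the host names in this guide, and `run`/`DRY_RUN` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: push the admin keyring and ceph.conf to every other node.
# ASSUMPTION: root SSH access to each node in NODES.

NODES="${NODES:-ceph-node2 ceph-node3}"
FILES="/etc/ceph/ceph.client.admin.keyring /etc/ceph/ceph.conf"

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

push_ceph_files() {
    for n in $NODES; do
        for f in $FILES; do
            run rsync -av --progress "$f" "root@$n:/etc/ceph/"
        done
    done
}
```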

Add monitors (first synchronize the clocks by pointing every node at the cluster's NTP server).

On ceph-node2:

mkdir /var/lib/ceph/mon/ceph-ceph-node2/ /tmp/ceph-node2

Add the ceph-node2 monitor settings:

cd /etc/ceph/
vim /etc/ceph/ceph.conf

[global]
fsid = 56dd83c2-af48-4288-b839-89047a3fcaab
public network = 10.100.0.0/16
osd pool default min size = 1
osd pool default pg num = 128
osd pool default pgp num = 128
osd journal size = 1024

[mon]
mon initial members = ceph-node1
mon host = ceph-node1,ceph-node2,ceph-node3
mon addr = 10.100.20.41,10.100.20.68,10.100.20.61

[mon.ceph-node1]
host = ceph-node1
mon addr = 10.100.20.41

[mon.ceph-node2]
mon_addr = 10.100.20.68:6789
host = ceph-node2
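Only the last section changes from node to node, so it can be generated instead of typed. This is a minimal sketch with a hypothetical `mon_section` helper. Port 6789 is the default monitor port, matching the `mon_addr` lines above.

```shell
#!/bin/sh
# Sketch: print the [mon.<id>] section that each new monitor node adds to ceph.conf.
# mon_section is a hypothetical helper; 6789 is the default monitor port.

mon_section() {
    # $1 = monitor id / host name, $2 = IP address
    printf '[mon.%s]\nmon_addr = %s:6789\nhost = %s\n' "$1" "$2" "$1"
}

# Example on ceph-node2:
# mon_section ceph-node2 10.100.20.68 >> /etc/ceph/ceph.conf
```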

Extract the monitor keyring from the cluster:

ceph auth get mon. -o /tmp/ceph-node2/monkeyring

Fetch the monitor map from the cluster:

ceph mon getmap -o /tmp/ceph-node2/monmap

Build the new monitor's data store (mkfs) from the keyring and the existing monmap:

ceph-mon -i ceph-node2 --mkfs --monmap /tmp/ceph-node2/monmap --keyring /tmp/ceph-node2/monkeyring

Start the Ceph service:

service ceph start

Add the new monitor to the cluster:

ceph mon add ceph-node2 10.100.20.68:6789
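The ceph-node2 steps above, and the ceph-node3 steps that repeat them, differ only in the monitor id and address, so they can be wrapped in one function. This is a minimal sketch to run on the new node. It assumes ceph.conf (with the node's `[mon.<id>]` section) and the admin keyring are already in place, and that the monitor uses the default port 6789. `add_monitor`, `run` and `DRY_RUN` are hypothetical names. The command order mirrors this guide.

```shell
#!/bin/sh
# Sketch: add one monitor, following the same command order as the ceph-node2 steps.
# ASSUMPTIONS: run on the new node; ceph.conf and admin keyring already copied there.

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

add_monitor() {
    id="$1"            # monitor id, e.g. ceph-node2
    addr="$2"          # monitor IP, e.g. 10.100.20.68
    tmp="/tmp/$id"     # scratch dir for the keyring and monmap
    run mkdir -p "/var/lib/ceph/mon/ceph-$id" "$tmp"
    run ceph auth get mon. -o "$tmp/monkeyring"
    run ceph mon getmap -o "$tmp/monmap"
    run ceph-mon -i "$id" --mkfs --monmap "$tmp/monmap" --keyring "$tmp/monkeyring"
    run service ceph start
    run ceph mon add "$id" "$addr:6789"
}
```

On ceph-node3 the call would be `add_monitor ceph-node3 10.100.20.61`.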

Adding OSDs follows the same steps as on ceph-node1.

On ceph-node3, add the monitor settings:

cd /etc/ceph/
vim /etc/ceph/ceph.conf

[global]
fsid = 56dd83c2-af48-4288-b839-89047a3fcaab
public network = 10.100.0.0/16
osd pool default min size = 1
osd pool default pg num = 128
osd pool default pgp num = 128
osd journal size = 1024

[mon]
mon initial members = ceph-node1
mon host = ceph-node1,ceph-node2,ceph-node3
mon addr = 10.100.20.41,10.100.20.68,10.100.20.61

[mon.ceph-node1]
host = ceph-node1
mon addr = 10.100.20.41

[mon.ceph-node3]
mon_addr = 10.100.20.61:6789
host = ceph-node3

Extract the monitor keyring from the cluster:

ceph auth get mon. -o monkeyring

Fetch the monitor map from the cluster:

ceph mon getmap -o monmap

Build the new monitor's data store (mkfs) from the keyring and the existing monmap:

ceph-mon -i ceph-node3 --mkfs --monmap monmap --keyring monkeyring

Start the Ceph service:

service ceph start

Add the new monitor to the cluster:

ceph mon add ceph-node3 10.100.20.61:6789

Adding OSDs follows the same steps as on ceph-node1.
